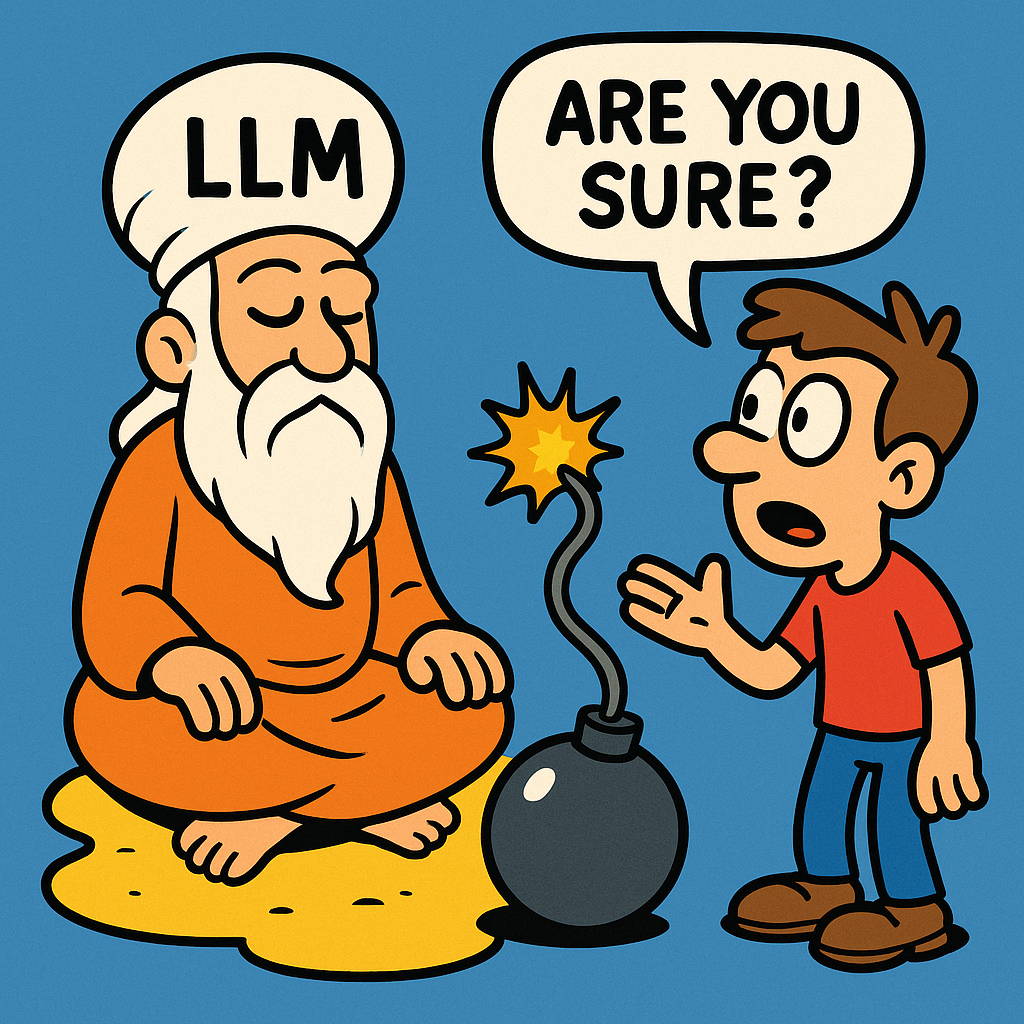

Making Decisions in the Face of Uncertainty—Again.

This is not the first time I have had to make decisions in the presence of uncertainty. At TruU, we use various techniques (IP-protected) to make reliable decisions despite errors in both deep learning and traditional ML models. In addition, my...